10 questions on early literacy and speech tech

July 22, 2022

The “house” was packed for our recent webinar “New voice features to power early literacy,” where we demoed new speech technology features that spot, capture, and analyze children’s short utterances, such as letter names and letter sounds.

Who knew the topic of voice-powered early literacy could be so hot? We did, and so did our webinar attendees, which included education companies, teachers, district leaders, literacy advocates, and many others.

Missed the webinar? Looking to learn more about how SoapBox’s speech technology powers early literacy activities such as phoneme isolation, blending, and manipulation, as well as custom words and pronunciations, for students in grades PreK-3? We’ve got you covered! Here’s the recording.

And now, here are the top 10 audience questions from the webinar, complete with answers.

10 questions on voice-powered early literacy

1. How does SoapBox filter out irrelevant background noise?

Our speech models are built to understand children’s speech in real-world noisy environments — without the need for headsets and mics.

We also test in noisy environments — classrooms, living rooms, etc. — with signal-to-noise ratios (SNRs) of 10 to 20 decibels (dB).
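SNR in decibels is ten times the base-10 logarithm of the ratio of speech power to noise power. So 10 dB means the speech carries 10 times the power of the background noise, and 20 dB means 100 times. The minimal Python sketch below assumes you already have separate speech and noise samples; in practice the noise level is usually estimated, for example from silent stretches of a recording.

```python
import math
from typing import Sequence


def mean_power(samples: Sequence[float]) -> float:
    """Average power of a signal: the mean of its squared sample values."""
    return sum(s * s for s in samples) / len(samples)


def snr_db(speech: Sequence[float], noise: Sequence[float]) -> float:
    """Signal-to-noise ratio in decibels: 10 * log10(P_speech / P_noise)."""
    return 10 * math.log10(mean_power(speech) / mean_power(noise))


# Illustrative samples only: scaling the noise amplitude by sqrt(10) gives
# 10x the power (10 dB); scaling it by 10 gives 100x the power (20 dB).
noise = [0.1, -0.1, 0.1, -0.1]
speech_10db = [s * math.sqrt(10) for s in noise]
speech_20db = [s * 10 for s in noise]

print(round(snr_db(speech_10db, noise), 2))  # 10.0
print(round(snr_db(speech_20db, noise), 2))  # 20.0
```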

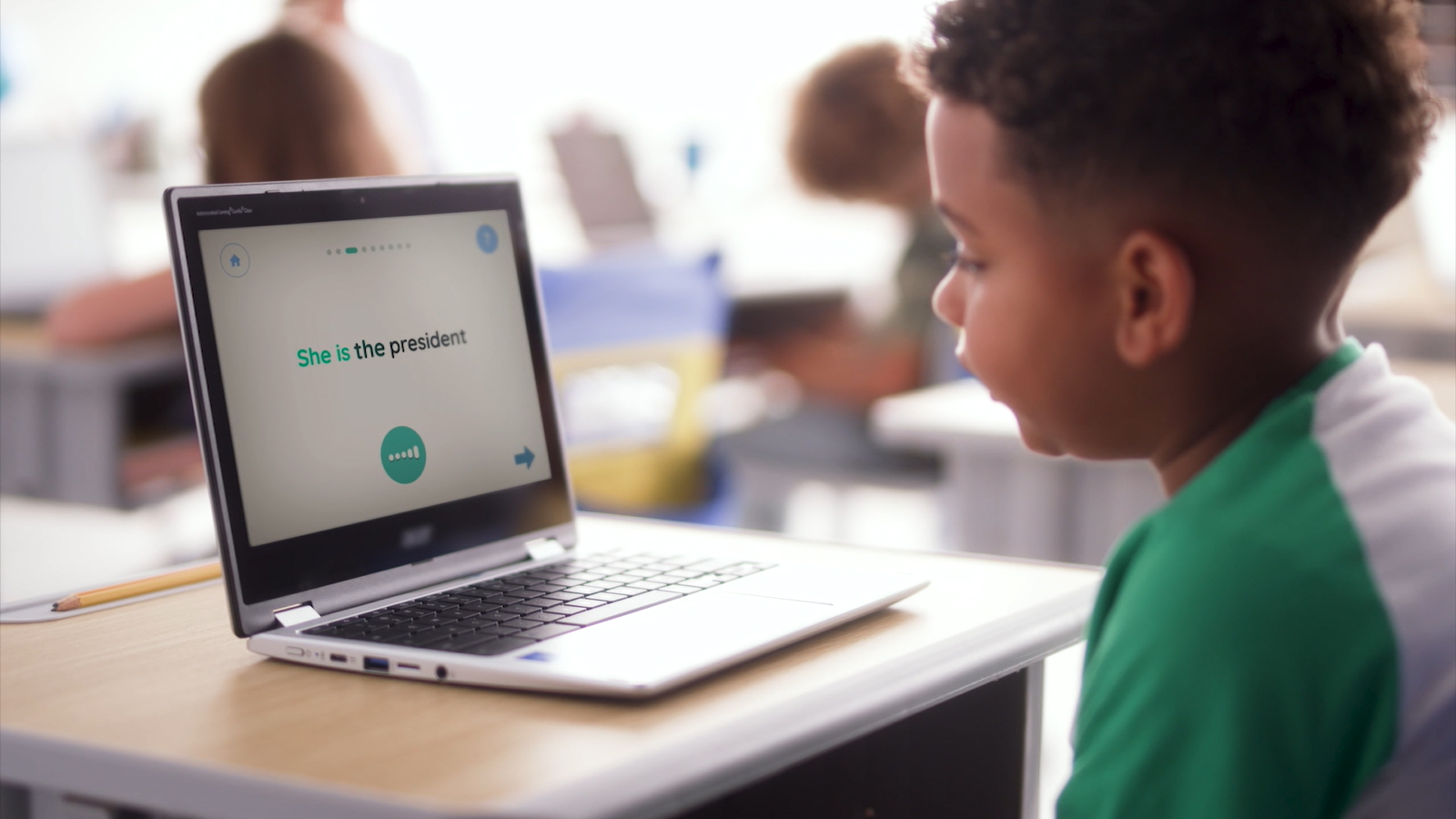

Here’s five-year-old Aku practicing his English in a noisy playroom on our client Lingumi’s English language learning (ELL) platform:

2. How quickly does SoapBox process children’s audio?

For short utterances, such as phonemes, letter names, or words, our system responds within 500 milliseconds.

For longer utterances, such as passages of text, response times depend on the length of the audio file (e.g., about 20 seconds to process a 60-second audio file).
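As a back-of-the-envelope sketch, the single data point above (about 20 seconds to process a 60-second file) works out to roughly a third of real time. The helper below assumes processing time scales linearly with audio length at that ratio; the post does not state this, so treat the output as a rough planning estimate, not a guaranteed response time.

```python
# Assumption: processing time grows linearly with audio length.
# The only published data point is ~20 s of processing per 60 s of audio.
DEFAULT_RATIO = 20 / 60


def estimate_processing_seconds(audio_seconds: float, ratio: float = DEFAULT_RATIO) -> float:
    """Rough processing-time estimate for long-form audio such as passage reading."""
    if audio_seconds < 0:
        raise ValueError("audio length must be non-negative")
    return audio_seconds * ratio


for length in (30, 60, 120):
    print(f"{length} s of audio -> ~{estimate_processing_seconds(length):.0f} s to process")
```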

3. What languages are supported by SoapBox’s phoneme-level scoring feature?

Most of our clients are starting their voice journey with English, but our voice engine is language agnostic, allowing us to work with other languages on a case-by-case basis.

We also have Spanish, Portuguese, and Mandarin products in beta, so watch our blog for updates on when these will be launched to the market.

4. How does SoapBox handle differing acceptable pronunciations where a phoneme can have many different allophones?

We build our speech models to understand and accept different pronunciations of words and phonemes from children all over the world. Take the letter T, for example. We’ve trained our speech models on all the ways the T sound is pronounced (e.g., as a glottal stop, aspirated, or unaspirated), whether it occurs at the beginning, middle, or end of a word.

So whether a child pronounces the word bottle as [b ɔ t ə l] or [b ɒ ʔ ə l] (with the glottal stop), for example, our voice engine will accept both pronunciations.
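One simple way to picture "accepting both pronunciations" is a lexicon that lists every acceptable variant of a word. The sketch below is a hypothetical illustration, not SoapBox’s internal design: the `ACCEPTED` lexicon and `is_accepted` helper are invented names, and exact matching on phone sequences is far simpler than the acoustic scoring a real speech engine performs. Only the two "bottle" transcriptions come from the example above.

```python
from typing import Dict, List, Set, Tuple

# Hypothetical lexicon: each word maps to the set of pronunciations that
# should be accepted, written as tuples of IPA phones.
ACCEPTED: Dict[str, Set[Tuple[str, ...]]] = {
    "bottle": {
        ("b", "ɔ", "t", "ə", "l"),  # medial /t/ realized as [t]
        ("b", "ɒ", "ʔ", "ə", "l"),  # medial /t/ realized as a glottal stop
    },
}


def is_accepted(word: str, phones: List[str]) -> bool:
    """True if the recognized phone sequence exactly matches an accepted variant."""
    return tuple(phones) in ACCEPTED.get(word.lower(), set())


print(is_accepted("bottle", ["b", "ɒ", "ʔ", "ə", "l"]))  # True
print(is_accepted("bottle", ["b", "ɔ", "d", "ə", "l"]))  # False
```

Adding a new accent or allophone then means adding a variant to the set rather than changing the checking logic.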

5. Is SoapBox as accurate as a teacher or human assessor?

Yes, and this has been proven. In-house testing and independent benchmarking by researchers and clients like Imagine Learning, McGraw Hill, Amplify, MetaMetrics, Lingumi, and others have found that our voice engine scores children’s speech as accurately as a trained human assessor.

For example, in Imagine Learning’s three-month pilot, SoapBox scored “oral reading fluency and language artifacts with a level of accuracy comparable to that of experienced educators in the classroom.”

6. Can the voice data SoapBox returns be reported at the class level?

Yes, it can. As the voice provider, we send an analysis of students’ speech to our clients, and they decide how that voice data is surfaced (e.g., at the individual, class, school, or district level) and to whom (e.g., students, teachers, or schools).
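Because aggregation happens on the client side, a platform can roll per-student results up to whatever level it chooses. The minimal sketch below assumes hypothetical per-student records with `class_id`, `school`, and `score` fields; these names and values are illustrative and are not SoapBox’s response format.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, List

# Hypothetical per-student results a client might store after receiving
# speech analysis. Field names and scores are illustrative only.
results: List[dict] = [
    {"student": "s1", "class_id": "K-A", "school": "Elm", "score": 0.92},
    {"student": "s2", "class_id": "K-A", "school": "Elm", "score": 0.78},
    {"student": "s3", "class_id": "K-B", "school": "Elm", "score": 0.81},
]


def average_by(rows: List[dict], level: str) -> Dict[str, float]:
    """Average the score of every row sharing the same value of `level`."""
    groups: Dict[str, List[float]] = defaultdict(list)
    for row in rows:
        groups[row[level]].append(row["score"])
    return {group: round(mean(scores), 3) for group, scores in groups.items()}


print(average_by(results, "class_id"))  # {'K-A': 0.85, 'K-B': 0.81}
print(average_by(results, "school"))    # {'Elm': 0.837}
```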

7. What are some examples of front-end (student- or teacher-facing) platforms that are powered by SoapBox’s voice engine?

Education companies like McGraw Hill, Amplify, and Imagine Learning are already using our speech technology to power their learning tools and literacy programs to help kids become successful readers.

This blog post walks through how McGraw Hill is integrating our speech technology into its Reading Mastery program and includes images of a student view and a teacher view of an oral reading assessment.

Check out the Blog and Resources sections of our website for more insights into our clients and the products we’re powering for them.

8. Does SoapBox have sample code, such as Git repositories, for front-end developers who are testing your speech technology for a proof of concept (POC)?

SoapBox Labs’ APIs are built to deliver functional integrations quickly and easily.

We use standard RESTful APIs that front-end engineers will be familiar with, along with straightforward URLs, HTTP response codes, and standard HTTP features that any HTTP client understands. So any third-party application that can make HTTP requests has what it needs to integrate with SoapBox’s services.
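To illustrate the point, here is a sketch that uses only Python’s standard-library `urllib`. The endpoint URL, the `text` query parameter, the `x-api-key` header, and sending raw WAV bytes as the request body are all hypothetical placeholders; the real request format is defined in SoapBox’s developer documentation.

```python
import urllib.error
import urllib.parse
import urllib.request
from typing import Tuple

# Hypothetical endpoint: replace with the URL from the developer documentation.
API_URL = "https://api.example.com/v1/score"


def build_scoring_request(audio: bytes, expected_text: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a POST request carrying a WAV clip."""
    query = urllib.parse.urlencode({"text": expected_text})  # hypothetical parameter
    return urllib.request.Request(
        url=f"{API_URL}?{query}",
        data=audio,
        method="POST",
        headers={
            "Content-Type": "audio/wav",
            "x-api-key": api_key,  # hypothetical auth header
        },
    )


def send(request: urllib.request.Request) -> Tuple[int, bytes]:
    """Send the request and return (HTTP status code, raw response body)."""
    try:
        with urllib.request.urlopen(request, timeout=10) as response:
            return response.status, response.read()
    except urllib.error.HTTPError as err:
        # 4xx/5xx responses arrive as HTTPError; the status code is on err.code.
        return err.code, err.read()
```

Building the request separately from sending it keeps the request easy to inspect in tests without touching the network.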

We provide clients with developer documentation to support their integration experience, and access to our Confluence ticketing system for support queries. We also offer them access to our SoapBox Studio consulting service, where they can get technical integration advice from our speech technology experts.

Get started by telling us a bit about your product and we’ll be in touch to discuss your use case further.

9. Could SoapBox’s custom word capability be used for identifying code switching in a child’s storytelling?

Great question! Using our custom word markup, clients can specify their own custom words or custom pronunciations for any word. It can be used for a word in any language as long as you can approximate it with our phoneme set.

This is very useful for code switching, especially for underserved languages that don’t have a lot of speech technology resources.
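To check whether a custom pronunciation can be expressed, a client could test each symbol against the supported phoneme inventory. In the sketch below, the ARPAbet-style `PHONEME_SET` and the space-separated pronunciation strings are assumptions for illustration, not SoapBox’s actual phoneme set or markup syntax. Spanish "jugo" begins with /x/, which has no symbol in this set, so it is approximated with `HH`.

```python
from typing import List, Set

# Hypothetical ARPAbet-style phoneme inventory (a small subset for illustration).
PHONEME_SET: Set[str] = {
    "AA", "AE", "AH", "B", "D", "EH", "G", "HH", "IY", "K",
    "L", "M", "N", "OW", "P", "R", "S", "T", "UW",
}


def unsupported_phonemes(pronunciation: str, phoneme_set: Set[str] = PHONEME_SET) -> List[str]:
    """Return the symbols in a space-separated pronunciation that the set lacks."""
    return [p for p in pronunciation.split() if p not in phoneme_set]


# Spanish "perro" can be approximated entirely with symbols in the set.
print(unsupported_phonemes("P EH R OW"))   # []
# A literal "X" for the /x/ in "jugo" is not available...
print(unsupported_phonemes("X UW G OW"))   # ['X']
# ...so approximate it with the nearest available sound, HH.
print(unsupported_phonemes("HH UW G OW"))  # []
```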

10. In what ways can SoapBox’s speech technology be used in the future for play-based interactivity?

Voice is the most natural interface for all communications, including more immersive learning and play experiences — especially when it comes to kids! SoapBox envisions a near future where voice — AKA speech technology — plays a major role in gaming, interactive TV, and the metaverse.

- Gaming: To increase accessibility and reduce friction in gameplay. Speech technology can also help to keep children safe when gaming by moderating for toxic behavior and language.

- Media: With features like voice command and voice control, voice AI delivers interactive conversational storytelling, promoting longer and more immersive engagement between kids and their favorite characters, whether on TV or in a theme park!

- Smart toys: According to Mattel, the most common request from kids who love Barbie is to be able to talk to her. The reasons for this, we believe, are obvious: to offer kids more magical BFF experiences!

In this video, six-year-old Noah shows how using his voice shapes his entire day, from learning to playing to organizing to building his confidence:

Download our Powering Phonemic Awareness product sheet

Our Phonemic Awareness product sheet introduces our off-the-shelf features for foundational early childhood literacy skills. Download your free copy to learn how you can use these features to power literacy assessment and kids’ independent reading practice.

Ready to add speech technology to your education products?

Let us help you voice-enable and automate your learning tools.

Tell us more about your product on our Get Started form, and we’ll be in touch to learn more about your use case and introduce you to our speech recognition technology for kids.